Cart Abandonment Hurting Sales? Use AI to Recover Lost Sales and Boost Conversions 3x!

Late one night, you open your e-commerce dashboard. It’s been a banner day: 100,000 users visited your platform, your tech stack ran smoothly, and your CDP worked overtime, collecting terabytes of data. The recommendation engine hummed along, suggesting products to eager shoppers.

But as you dive deeper, excitement turns to concern. 67% of carts were abandoned. You pause and ask yourself: what went wrong in those last critical moments before customers clicked ‘buy’?

If this sounds all too familiar, you’re not alone. Even e-commerce giants face this struggle. ASOS, for instance, runs an impressive tech setup: AWS auto-scaling, optimized MongoDB clusters, and a Redis cache with an 89% hit ratio. Yet they still uncovered a 76.8% cart abandonment rate, bleeding $2.3 million daily.

What was the silent culprit? A mere 2.3-second delay in their personalization engine. Just over two seconds. That’s all it took to lose millions!

Does this make you think about your system? Your numbers might look impressive, but are unseen bottlenecks quietly driving customers away?

What’s really happening in those final moments? Let’s uncover the truth.

When Seconds Cost Millions: The Hidden Impact of Cart Abandonment

Here’s what’s happening in many systems right now: your rule-based personalization engine, designed to engage users, is struggling with seconds of latency. According to a Google Cloud study, every 100ms of delay costs retailers 1% in conversions. Now, let’s do the math. Say your store attracts 100,000 monthly users with an average cart value of $50, and 10% of them convert, for $500,000 in monthly revenue. A 1-second delay is ten 100ms increments, or roughly a 10% drop in conversions, which could cost you $50,000 in lost revenue every month. And that’s just the tip of the iceberg.
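To make that arithmetic explicit, here is a minimal sketch. It assumes the 1%-per-100ms rule applies linearly as a relative drop in conversions and that 10% of visitors convert; the function name and the conversion rate are illustrative assumptions, not figures from the study.

```python
def estimate_monthly_loss(
    monthly_users: int,
    conversion_rate: float,
    avg_cart_value: float,
    delay_ms: float,
    loss_per_100ms: float = 0.01,
) -> float:
    """Estimate monthly revenue lost to added latency.

    Assumes a linear rule of thumb: each 100 ms of delay reduces the
    conversion rate by `loss_per_100ms` (1% relative by default).
    """
    baseline_revenue = monthly_users * conversion_rate * avg_cart_value
    # Relative conversion drop, capped so it can never exceed 100%.
    relative_drop = min((delay_ms / 100) * loss_per_100ms, 1.0)
    return baseline_revenue * relative_drop


# 100,000 users, an assumed 10% conversion rate, $50 carts, 1 second of delay.
loss = estimate_monthly_loss(100_000, 0.10, 50.0, delay_ms=1_000)
print(f"${loss:,.0f}")  # prints $50,000
```

Real conversion curves are rarely linear, so treat this as a back-of-the-envelope estimate rather than a forecast.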

A popular sportswear retailer, Nike, recently faced this exact issue. Their infrastructure included AWS Lambda for scalability, MongoDB Atlas for seamless data management, and a Redis cache with an 85% hit ratio.

Yet, during a high-traffic Black Friday sale, their personalization engine, running on Apache Kafka, couldn’t keep up. With a 2.5-second response time for personalized recommendations, their cart abandonment rate soared to 78%, resulting in $3.2 million in missed revenue over the weekend. Despite their advanced tech stack, their inability to process real-time intent signals cost them both revenue and customer trust.

Every moment of delay isn’t just a missed opportunity; it’s a customer lost to competitors who are faster, more agile, and better equipped with AI-driven real-time personalization engines.

When Your Stack Hold$ You Back

If you’re relying on traditional personalization strategies, your system might be quietly suffering from technical bottlenecks—problems that even major retailers have uncovered the hard way.

The big question is: do you want to keep running into these same roadblocks, or is it time to fix them?

Here’s what might be happening behind the scenes:

Batch Processing Bottlenecks

Most personalization engines process data in batches, often every 15-30 minutes. The catch? By the time decisions are made, they’re based on outdated user data. In fact, studies across 50+ platforms found 73% of personalization decisions were made with stale context.
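Why does batching produce stale context? If decisions are spread evenly over time, the snapshot each decision reads is, on average, half a batch interval old, and almost a full interval old just before the next run. The toy simulation below assumes one decision per minute and a fixed 20-minute schedule; the function name is hypothetical.

```python
def snapshot_age_minutes(decision_times: list[float], batch_interval: float) -> list[float]:
    """Age of the data snapshot each decision reads under a fixed batch schedule.

    Batches complete at t = 0, interval, 2*interval, ...; a decision at time t
    can only use the most recent completed batch.
    """
    ages = []
    for t in decision_times:
        last_batch = (t // batch_interval) * batch_interval
        ages.append(t - last_batch)
    return ages


# One decision per minute over 2 hours, with a 20-minute batch interval.
times = [float(m) for m in range(120)]
ages = snapshot_age_minutes(times, batch_interval=20.0)
print(max(ages), sum(ages) / len(ages))  # 19.0 9.5
```

A streaming pipeline updates context as events arrive, so data age is bounded by processing latency instead of the batch interval.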

Take a look at Nordstrom, for example. Their system processed user data every 20 minutes. During Black Friday, this delay cost them $3.2 million in lost sales because their personalization couldn’t react in real time.

Data Silos and API Hops

How well do your CDP, CRM, and e-commerce platforms talk to each other? If they rely on REST APIs, every “hop” adds 50-100ms of latency. Target discovered this issue the hard way: their system had 8 API hops, creating an 800ms delay before personalized recommendations even showed up. That’s a lifetime in e-commerce when speed is everything.
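The cost of chained hops is additive: when each call waits for the previous one, per-hop latencies sum. A small sketch using the 50-100ms per-hop range quoted above (the function name is illustrative):

```python
def chained_latency_ms(hops: int, min_ms: float = 50.0, max_ms: float = 100.0) -> tuple[float, float]:
    """Best- and worst-case latency for `hops` sequential API calls.

    Each hop must finish before the next starts, so latencies add up.
    """
    if hops < 0:
        raise ValueError("hops must be non-negative")
    return hops * min_ms, hops * max_ms


low, high = chained_latency_ms(8)
print(f"8 hops: {low:.0f}-{high:.0f} ms")  # 8 hops: 400-800 ms
```

Target’s reported 800ms sits at the upper end of that range. Removing hops, or issuing independent calls concurrently, shrinks the budget.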

Sequential Processing Overhead

Is your system processing user data one step at a time? Sequential pipelines slow everything down. ASOS learned this firsthand when they found their system processed 27 user signals sequentially, adding 1.2 seconds of overhead. That’s over a second wasted before their machine learning models even started making predictions.
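When signals are independent of one another, they don’t have to be computed one after another. This sketch uses Python’s asyncio to compare sequential and concurrent evaluation. Each “signal” is simulated with a short sleep standing in for an I/O-bound lookup; the 27 signals mirror the example above, while the 10ms delay and all function names are assumptions.

```python
import asyncio
import time

SIGNAL_DELAY_S = 0.01  # assumed I/O latency per signal lookup (hypothetical)


async def fetch_signal(i: int) -> int:
    """Stand-in for an I/O-bound signal lookup (e.g. a feature-store read)."""
    await asyncio.sleep(SIGNAL_DELAY_S)
    return i * i  # placeholder signal value


async def sequential(n: int) -> list[int]:
    # Each lookup waits for the previous one: total time ~ n * delay.
    return [await fetch_signal(i) for i in range(n)]


async def concurrent(n: int) -> list[int]:
    # Independent lookups run concurrently: total time ~ one delay.
    return list(await asyncio.gather(*(fetch_signal(i) for i in range(n))))


def timed(coro_fn, n: int) -> tuple[list[int], float]:
    start = time.perf_counter()
    result = asyncio.run(coro_fn(n))
    return result, time.perf_counter() - start


seq_result, seq_s = timed(sequential, 27)
con_result, con_s = timed(concurrent, 27)
print(f"sequential: {seq_s * 1000:.0f} ms, concurrent: {con_s * 1000:.0f} ms")
```

This helps only when lookups are I/O-bound; CPU-heavy feature computation would need multiple processes or vectorized code instead.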

Monolithic ML Model Architecture

Here’s another one: are you also relying on a single, monolithic ML model for all your personalization decisions? Many platforms do this, but it’s a bottleneck. Fashion Nova, for instance, found their monolithic recommendation model took 900ms to load into memory, followed by another 400ms per inference. Combined, that’s 1.3 seconds for any prediction that required loading the model, far too slow for real-time personalization.
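A common mitigation for load-time cost, whatever the model architecture, is to load the model once per process and keep it in memory, so each request pays only for inference. Here is a minimal sketch using `functools.lru_cache`; `load_model`, its sleep, and the `recommender.bin` path are hypothetical stand-ins for deserializing a real model.

```python
import functools
import time


@functools.lru_cache(maxsize=1)
def load_model(path: str) -> dict:
    """Hypothetical loader: the sleep simulates an expensive deserialization."""
    time.sleep(0.2)
    return {"path": path, "bias": 0.5}


def predict(features: list[float], path: str = "recommender.bin") -> float:
    model = load_model(path)  # only the first call per path pays the load cost
    return model["bias"] + sum(features)


start = time.perf_counter()
predict([0.1, 0.2])  # cold: load + inference
cold_s = time.perf_counter() - start

start = time.perf_counter()
predict([0.1, 0.2])  # warm: cached model, inference only
warm_s = time.perf_counter() - start
print(f"cold: {cold_s:.3f}s, warm: {warm_s:.3f}s")
```

Caching removes the repeated load but leaves the per-inference cost untouched, which is why the model architecture itself still matters.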

AI-Driven E-Commerce: The New Standard for Retail Success

Industry leaders are processing millions of personalization decisions in milliseconds. From Netflix's 8 trillion daily events to Amazon's lightning-fast recommendations, e-commerce architecture is undergoing a seismic shift.

Let's see how cutting-edge AI architectures are making this possible today.

Stream Processing: Where Every Millisecond Matters

Gone are the days of batch processing. Amazon's Kinesis Data Streams now processes 2.3M events per second with just 8ms latency. This enables real-time cart abandonment detection and price adjustments before customers even think about leaving. Technologies like Apache Kafka and Apache Flink handle these real-time streams with sub-10ms latency.
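The core of real-time abandonment detection can be sketched without any streaming framework: consume events in order, track each session’s cart state, and flag sessions whose cart is non-empty but idle past a timeout. The event shape, the 10-minute timeout, and all names below are assumptions for illustration; in production this logic would typically live in a stream processor such as Kafka Streams or Flink, using keyed state.

```python
from dataclasses import dataclass, field

IDLE_TIMEOUT_S = 600  # assumed: 10 minutes of inactivity counts as "at risk"


@dataclass
class Event:
    session_id: str
    kind: str  # "add_to_cart", "remove_from_cart", "checkout", or "page_view"
    ts: float  # event time in seconds


@dataclass
class AbandonmentDetector:
    cart_size: dict[str, int] = field(default_factory=dict)
    last_seen: dict[str, float] = field(default_factory=dict)
    flagged: set[str] = field(default_factory=set)

    def process(self, event: Event) -> list[str]:
        """Update session state, then return sessions that just became idle."""
        sid = event.session_id
        if event.kind == "add_to_cart":
            self.cart_size[sid] = self.cart_size.get(sid, 0) + 1
        elif event.kind == "remove_from_cart":
            self.cart_size[sid] = max(self.cart_size.get(sid, 0) - 1, 0)
        elif event.kind == "checkout":
            self.cart_size[sid] = 0  # purchase completed, nothing to recover
        self.last_seen[sid] = event.ts
        self.flagged.discard(sid)  # any activity resets the at-risk flag
        return self._expire(now=event.ts)

    def _expire(self, now: float) -> list[str]:
        newly_idle = []
        for sid, seen in self.last_seen.items():
            idle = now - seen >= IDLE_TIMEOUT_S
            if idle and self.cart_size.get(sid, 0) > 0 and sid not in self.flagged:
                self.flagged.add(sid)
                newly_idle.append(sid)
        return newly_idle


detector = AbandonmentDetector()
stream = [
    Event("a", "add_to_cart", 0),
    Event("b", "add_to_cart", 30),
    Event("b", "checkout", 90),
    Event("c", "page_view", 700),  # advances event time past a's timeout
]
for e in stream:
    for sid in detector.process(e):
        print(f"session {sid} at risk at t={e.ts}")  # session a at risk at t=700
```

Here the clock only advances when new events arrive, and every event scans all sessions; a real deployment would use per-key timers so idle sessions are flagged on schedule and at scale.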

Neural Networks: The Brain That Never Sleeps

Here's where it gets really interesting. Transformer models, like Zalando's BERT-based recommendation model, slash decision time from 600ms to 50ms while boosting accuracy by 43%. The secret? Real-time feature computation and dynamic context adjustments, delivering faster, smarter decisions.
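Real-time feature computation usually means deriving features from a user’s most recent events as they arrive, rather than from a nightly batch. Here is a minimal sketch of per-user sliding-window features; the 5-minute window and the feature names are assumptions. Features like these would then be fed to whatever model is being served, transformer or otherwise.

```python
from collections import deque


class SlidingWindowFeatures:
    """Per-user event counts over a trailing time window (event time, seconds)."""

    def __init__(self, window_s: float = 300.0) -> None:
        self.window_s = window_s
        self.events = deque()  # (timestamp, kind) pairs, oldest first

    def add(self, ts: float, kind: str) -> None:
        # Assumes events arrive in timestamp order.
        self.events.append((ts, kind))
        self._evict(now=ts)

    def _evict(self, now: float) -> None:
        # Drop events that have fallen out of the trailing window.
        while self.events and now - self.events[0][0] > self.window_s:
            self.events.popleft()

    def features(self, now: float) -> dict[str, float]:
        self._evict(now)
        views = sum(1 for _, k in self.events if k == "view")
        adds = sum(1 for _, k in self.events if k == "add_to_cart")
        return {
            "views_window": float(views),
            "adds_window": float(adds),
            # Share of recent views that led to an add-to-cart.
            "add_rate_window": adds / views if views else 0.0,
        }


f = SlidingWindowFeatures()
for ts, kind in [(0, "view"), (120, "view"), (150, "add_to_cart"), (320, "view")]:
    f.add(ts, kind)
print(f.features(now=320))
# {'views_window': 2.0, 'adds_window': 1.0, 'add_rate_window': 0.5}
```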

Distributed Cache Architecture: The Speed Foundation

Let's talk about handling scale. Shopify's architecture hits a 99.99% cache hit ratio, processing 5M requests/second with sub-5ms latency. Their trick? Multi-layer Redis caching, predictive warming, and cross-region syncing.
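Multi-layer caching generally means checking a small, fast in-process cache first, then a shared cache such as Redis, and only then the primary data store. The sketch below models that read path with plain Python dicts standing in for Redis and the database (no Redis client calls are used), plus a simple warm-up step for keys expected to be requested soon. Class and method names are hypothetical.

```python
from collections import OrderedDict
from typing import Callable


class TwoLayerCache:
    def __init__(self, l1_capacity: int, shared: dict, load_from_db: Callable[[str], str]) -> None:
        self.l1 = OrderedDict()  # small in-process LRU layer
        self.l1_capacity = l1_capacity
        self.shared = shared  # stand-in for a shared cache such as Redis
        self.load_from_db = load_from_db
        self.stats = {"l1": 0, "shared": 0, "db": 0}

    def _put_l1(self, key: str, value: str) -> None:
        self.l1[key] = value
        self.l1.move_to_end(key)
        if len(self.l1) > self.l1_capacity:
            self.l1.popitem(last=False)  # evict least recently used

    def get(self, key: str) -> str:
        if key in self.l1:
            self.stats["l1"] += 1
            self.l1.move_to_end(key)
            return self.l1[key]
        if key in self.shared:
            self.stats["shared"] += 1
            value = self.shared[key]
        else:
            self.stats["db"] += 1
            value = self.load_from_db(key)
            self.shared[key] = value  # populate the shared layer on a miss
        self._put_l1(key, value)
        return value

    def warm(self, keys: list[str]) -> None:
        """Predictive warming: preload expected keys into the shared layer."""
        for key in keys:
            if key not in self.shared:
                self.shared[key] = self.load_from_db(key)


cache = TwoLayerCache(l1_capacity=2, shared={}, load_from_db=lambda k: f"recs-for-{k}")
cache.warm(["user-1", "user-2"])
for user in ["user-1", "user-1", "user-2", "user-3"]:
    cache.get(user)
print(cache.stats)  # {'l1': 1, 'shared': 2, 'db': 1}
```

Only the unwarmed key reaches the database. Cross-region replication and invalidation are not modeled here.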

Micromodel Architecture: The Specialist Approach

Think of this as your AI council of experts. ASOS’s micromodel setup combines 12 specialized models (price sensitivity, category affinity, and more) to optimize recommendations. The result? Response times drop from 900ms to 75ms, delivering sharper insights in record time.
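Conceptually, a micromodel setup scores each item with several small, specialized models and blends their outputs. Here is a minimal sketch, assuming each specialist returns a score in [0, 1] and the blend is a fixed weighted average. The two toy specialists and their weights are hypothetical placeholders, not ASOS’s models.

```python
from typing import Callable

Item = dict[str, float]
Model = Callable[[Item], float]


def price_sensitivity(item: Item) -> float:
    # Hypothetical: items cheaper than the user's typical spend score higher.
    return max(0.0, min(1.0, 1.0 - item["price"] / item["typical_spend"] / 2))


def category_affinity(item: Item) -> float:
    # Hypothetical: precomputed share of the user's recent views in this category.
    return item["category_share"]


def blend(models: dict[str, tuple[Model, float]], item: Item) -> float:
    """Weighted average of specialist scores."""
    total_weight = sum(w for _, w in models.values())
    return sum(m(item) * w for m, w in models.values()) / total_weight


specialists = {
    "price": (price_sensitivity, 0.25),
    "category": (category_affinity, 0.75),
}
item = {"price": 40.0, "typical_spend": 80.0, "category_share": 0.25}
print(blend(specialists, item))  # 0.375
```

Because each specialist is small and independent, specialists can be evaluated in parallel and retrained separately, which is one plausible source of the latency gains described above.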

Your Path to Personalization Transformation

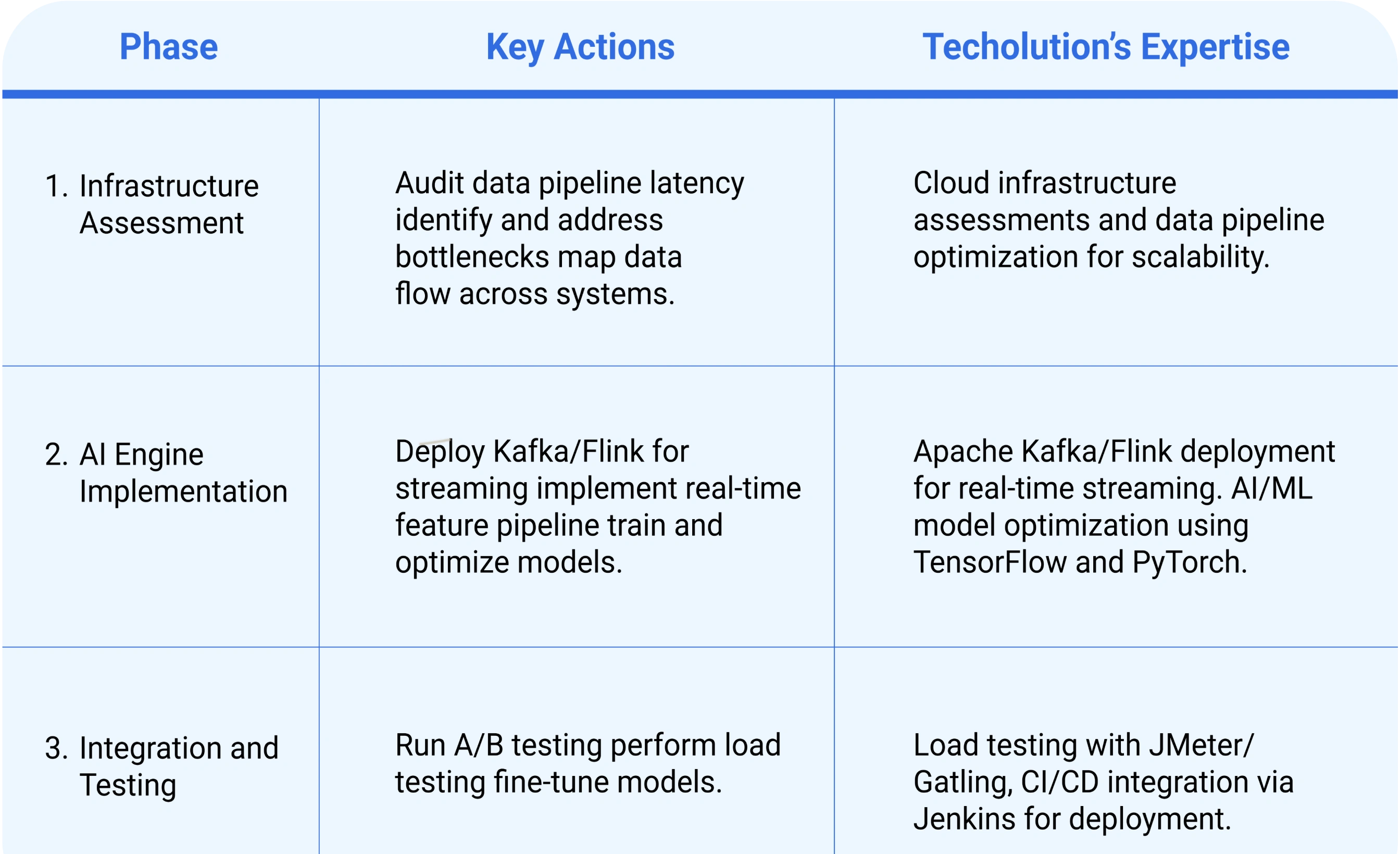

Ready to transform your personalization infrastructure? Here's your technical roadmap:

The Bottom Line

E-commerce is evolving at an unprecedented rate, driven by AI-powered, real-time personalization. If your current technical infrastructure is still rooted in rule-based personalization, you're not just missing out – you're falling behind.

Let’s do AI Right – not just by implementing it, but by transforming your system to deliver the personalized experiences your customers expect. Now’s the time to upgrade your architecture to support scalable, AI-driven solutions that deliver real-time insights, personalized content, and seamless customer journeys.

With the right tech stack, you can achieve up to 3x higher conversions by aligning your infrastructure with the future of e-commerce.

Are you ready to bridge the gap between your current system and the next-gen AI-powered personalization that your customers demand? Let’s talk about how we can implement a robust, scalable, and future-proof solution that drives both efficiency and revenue.

Frequently Asked Questions

1. What is cart abandonment in e-commerce?

Cart abandonment happens when shoppers add items to a cart but leave before paying, often due to extra fees, slow pages, limited payment choices, or other friction.

2. How does AI help reduce cart abandonment?

It spots at-risk behavior in real time and triggers personalized nudges, such as abandoned-cart emails, retargeting ads, or chat prompts, to win back the sale.

3. What are common reasons for cart abandonment?

High shipping costs, compulsory account signup, sluggish load times, complicated checkouts, or casual browsing with no purchase intent.

4. Can AI improve e-commerce conversion rates?

Yes. By predicting intent, tailoring recommendations, and timing reminders, AI recovery systems typically boost conversions 2-3× over manual methods.

5. What metrics gauge AI effectiveness in cart recovery?

Track recovery rate, revenue recovered, email open/click rates, post-retargeting conversions, and customer lifetime value.
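Most of these metrics are simple ratios over campaign records. Here is a minimal sketch, assuming one record per abandoned cart with flags for whether a recovery email was sent, opened, or clicked and whether the cart was eventually purchased. The record fields are hypothetical, and click rate is computed per email sent (some teams use clicks per open instead).

```python
from dataclasses import dataclass


@dataclass
class AbandonedCart:
    value: float
    email_sent: bool
    opened: bool
    clicked: bool
    recovered: bool  # purchase completed after the recovery flow


def recovery_metrics(carts: list[AbandonedCart]) -> dict[str, float]:
    sent = [c for c in carts if c.email_sent]
    recovered = [c for c in carts if c.recovered]
    return {
        "recovery_rate": len(recovered) / len(carts) if carts else 0.0,
        "revenue_recovered": sum(c.value for c in recovered),
        "open_rate": sum(c.opened for c in sent) / len(sent) if sent else 0.0,
        "click_rate": sum(c.clicked for c in sent) / len(sent) if sent else 0.0,
    }


carts = [
    AbandonedCart(50.0, True, True, True, True),
    AbandonedCart(80.0, True, True, False, False),
    AbandonedCart(30.0, True, False, False, False),
    AbandonedCart(40.0, False, False, False, True),
]
print(recovery_metrics(carts))
```

The last cart was recovered without any email, so some "recovered" revenue may have come back on its own; comparing against a holdout group helps separate the two. Customer lifetime value and post-retargeting conversions need longer attribution windows and are not computed here.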