Turn Million-Dollar AI Experiments into Billion-Dollar Solutions

5 AI Resolutions Every Business Leader Should Make to Turn Investments into Measurable Success in 2025. 🫶

If you've ever read Harry Potter, you probably remember the Chamber of Secrets – a hidden place where a monster lived, quietly causing chaos while most people were oblivious to its presence. It's a lot like the echo chamber effect in AI.

On the surface, AI feels like magic. It gives us personalized recommendations, curates our social feeds, and automates decisions. But beneath this shiny surface, there's a hidden risk: the echo chamber effect. It's what happens when algorithms keep feeding you more of what you already know, slowly narrowing your perspective and reinforcing biases.

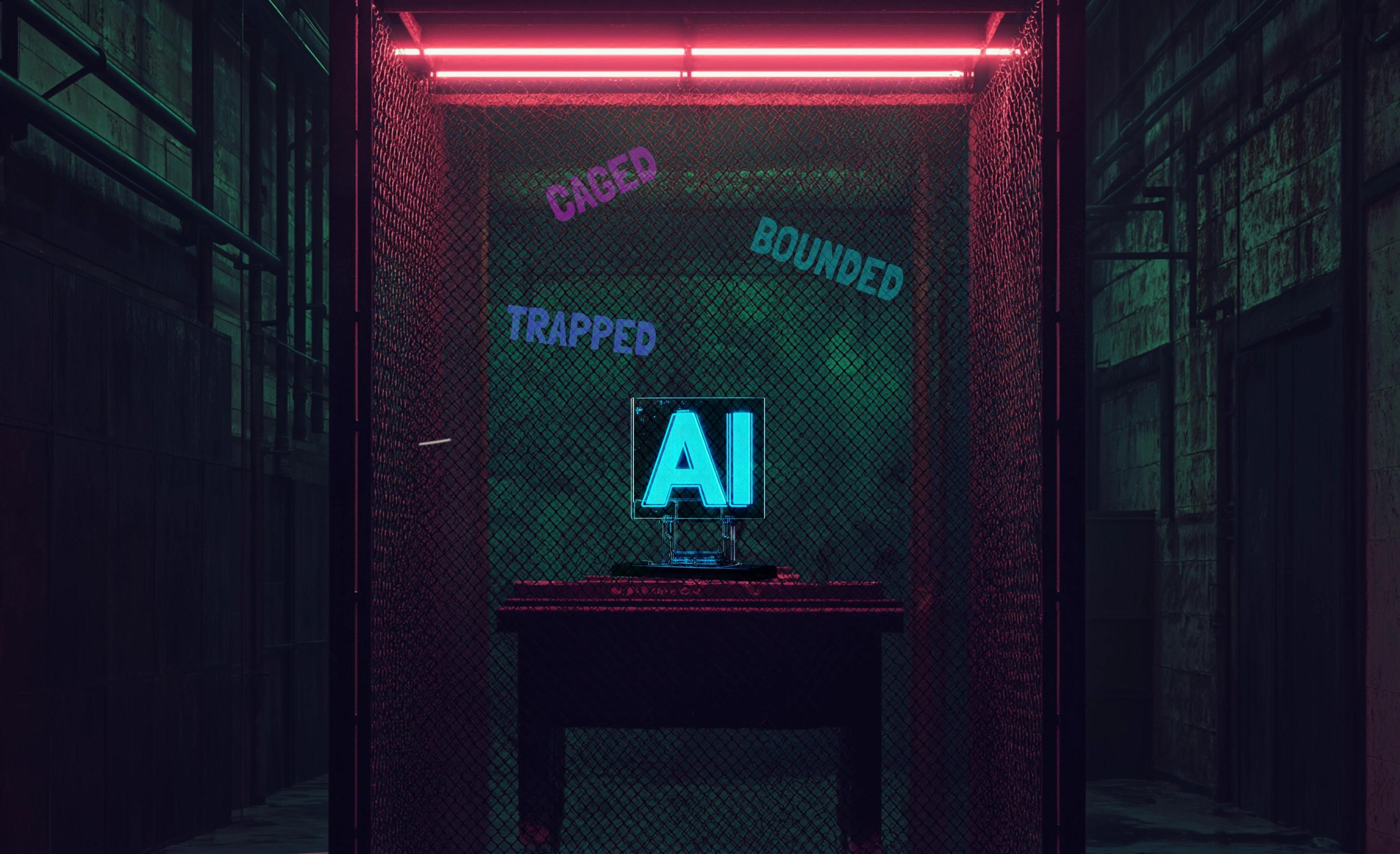

For individuals, it might mean seeing only one side of a story. For organizations, it can lead to flawed decisions, missed opportunities, and even reputational damage. If we don't address it, the echo chamber can trap us in a cycle of sameness – without us even realizing it.

What Is the Echo Chamber Effect?

At its core, the echo chamber effect happens when algorithms prioritize familiarity over variety. AI systems are designed to optimize engagement, so they keep showing you content, ideas, or products similar to what you've interacted with before. It feels comfortable—but it's limiting.

Imagine an online store only showing you the same few brands because that's what you've browsed before. Or a newsfeed that amplifies only one side of a political debate. Over time, you stop seeing the bigger picture, and your decisions reflect that tunnel vision.

Real-Life Examples of the Echo Chamber Effect

- Social Media – A Feedback Loop

Social platforms like Facebook and YouTube are built to keep users engaged. While their algorithms do this well, they often end up reinforcing political or ideological bubbles. A 2016 study published in Science found that social media users were significantly less likely to encounter views that challenged their beliefs – deepening divides rather than bridging them.

- E-Commerce – Trapped by Recommendations

On platforms like Amazon, AI recommendations are based on your browsing and purchase history. While this creates convenience, it can also box you in. A 2020 MIT Sloan study revealed that over 70% of online shoppers felt "trapped" by repetitive recommendations, unable to discover new products or brands.

- When Bias Creeps In During Corporate Hiring

Amazon's AI-driven hiring tool famously backfired because it was trained on biased historical data. The algorithm favored male candidates, reflecting patterns from past hiring decisions, rather than evaluating candidates on neutral, objective criteria.
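
One simple way to spot this kind of skew is to compare how often each group gets selected. The sketch below is only an illustration: the group names and decisions are made-up data, the helper functions are hypothetical, and the 0.8 cutoff borrows the "four-fifths" rule of thumb from US employment guidelines rather than anything Amazon is known to have used.

```python
def selection_rates(decisions):
    """Return the share of candidates selected in each group.

    `decisions` is a list of (group, was_selected) pairs.
    """
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratios(rates):
    """Divide each group's selection rate by the highest group's rate."""
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected, so there is no disparity to measure.
        return {group: 1.0 for group in rates}
    return {group: rate / highest for group, rate in rates.items()}


# Made-up screening results: 6 of 10 selected in group_a, 3 of 10 in group_b.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
ratios = impact_ratios(rates)

# Flag any group whose ratio falls below the (assumed) 0.8 threshold.
flagged = [group for group, ratio in ratios.items() if ratio < 0.8]
print(rates, ratios, flagged)
```

A flagged group is not proof of bias on its own, but it is a signal that the model and its training data deserve a closer look.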

Why Does This Happen?

The problem lies in how AI systems are designed. Their goal is to keep you engaged – whether that's through content, products, or decisions. To do this, they rely on patterns from your past behavior. But without diversity in data or regular oversight, these systems become echo chambers, reflecting only what they've already seen.

It's as if Hogwarts professors taught Harry, Ron, and Hermione only the spells they'd already learned. Sure, they'd become really good at those spells – but they'd miss out on the new knowledge and skills they'd need to face bigger challenges.
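
To see how quickly this narrowing can happen, here's a toy simulation. Everything in it is an assumption made for illustration: the three content categories, a "greedy" recommender that always shows whatever the user has clicked most, a user who clicks everything shown, and a hypothetical `explore_every` setting that occasionally surfaces the least-seen category. Real systems are far more complex, but the feedback loop works the same way.

```python
import math
from collections import Counter


def shannon_entropy(counts):
    """Diversity of a click history in bits (higher means more varied)."""
    total = sum(counts.values())
    return -sum(
        (c / total) * math.log2(c / total) for c in counts.values() if c > 0
    )


def simulate_feed(history, rounds, explore_every=0):
    """Replay a feed for `rounds` steps and return the final click counts.

    By default the feed always shows the most-clicked category.
    If `explore_every` is N > 0, every Nth step shows the least-clicked one.
    """
    counts = Counter(history)
    categories = sorted(counts)
    for step in range(1, rounds + 1):
        if explore_every and step % explore_every == 0:
            shown = min(categories, key=lambda c: (counts[c], c))
        else:
            shown = max(categories, key=lambda c: (counts[c], c))
        counts[shown] += 1  # assumed: the user clicks whatever is shown
    return counts


start = ["news", "sports", "music", "news"]

before = shannon_entropy(Counter(start))
greedy = shannon_entropy(simulate_feed(start, rounds=20))
mixed = shannon_entropy(simulate_feed(start, rounds=20, explore_every=3))

print(f"start: {before:.2f} bits, greedy: {greedy:.2f}, with exploration: {mixed:.2f}")
```

In this toy setup, the greedy feed ends up far less varied than where the user started, while occasional exploration keeps much more of that variety alive.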

Breaking Free From the AI Echo Chamber

Overcoming the echo chamber effect takes intentional effort. Here's how organizations and individuals can break free:

- Expand Your Data Horizons

AI models should be trained on diverse datasets that represent different perspectives. For example, LinkedIn has improved its job recommendations by incorporating feedback loops to ensure results reflect a wider range of candidates and opportunities.

- Be Transparent About Algorithms

Let users see how decisions are made. Google's "Why This Ad?" feature is a step in the right direction, giving users insight into why they're seeing certain content, along with the ability to adjust preferences.

- Introduce Diversity by Design

Organizations should actively include dissenting or contrasting data points when building AI systems. For instance, Spotify's music recommendations combine AI with human curators, ensuring playlists include both familiar and fresh tracks.

- Keep Systems Updated

Much like the Defense Against the Dark Arts class at Hogwarts, algorithms need regular refreshes to stay relevant. Auditing AI systems for bias and incorporating new data ensures decisions remain balanced and fair.

- Pair AI With Human Judgment

AI works best when it collaborates with people. Human oversight can catch biases that algorithms might miss and provide a sense of accountability.
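
The "diversity by design" idea can be sketched in a few lines. This is a minimal illustration, not how Spotify or any other platform actually works: the catalog, relevance scores, and `penalty` value are all invented, and `rerank_with_diversity` is a hypothetical helper that lowers an item's score each time its category has already been picked.

```python
from collections import Counter


def rerank_with_diversity(items, k, penalty=0.25):
    """Greedily pick `k` items, discounting categories already chosen.

    `items` is a list of (name, category, relevance) tuples.
    Each prior pick from the same category costs a candidate `penalty` points.
    """
    remaining = list(items)
    picked = []
    seen = Counter()
    while remaining and len(picked) < k:
        best = max(remaining, key=lambda item: item[2] - penalty * seen[item[1]])
        picked.append(best)
        seen[best[1]] += 1
        remaining.remove(best)
    return picked


# Made-up catalog: the three most "relevant" tracks are all pop.
catalog = [
    ("Track A", "pop", 0.95),
    ("Track B", "pop", 0.93),
    ("Track C", "pop", 0.90),
    ("Track D", "jazz", 0.70),
    ("Track E", "folk", 0.65),
]

plain_top3 = [name for name, _, _ in rerank_with_diversity(catalog, k=3, penalty=0.0)]
diverse_top3 = [name for name, _, _ in rerank_with_diversity(catalog, k=3)]

print(plain_top3)    # ['Track A', 'Track B', 'Track C']
print(diverse_top3)  # ['Track A', 'Track D', 'Track B']
```

With no penalty, the list is all pop. With a modest penalty, a jazz track makes the cut alongside two familiar favorites. Tuning that penalty is a judgment call, which is exactly where human oversight comes in.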

The Hidden Cost of Staying in the Echo Chamber

When organizations fail to address the echo chamber effect, the consequences are far-reaching:

• Stunted Innovation: Without exposure to new ideas, teams risk becoming stagnant.

• Flawed Decision-Making: A narrow perspective leads to poor strategies.

• Lost Trust: Users and stakeholders lose confidence when AI feels biased or limited.

But it doesn't have to be this way.

Step Out of the Chamber

Just as Harry Potter took action to confront the dangers of the Chamber of Secrets, organizations must actively address the risks hidden within AI systems. The echo chamber effect isn't inevitable; it's preventable. By diversifying data, promoting transparency, and keeping humans in the loop, we can unlock AI's full potential without getting trapped in its blind spots.